Hospitals and health systems are jumping into artificial intelligence (AI) in an effort to help physicians better analyze images and other clinical data. But reporters should be careful about overstating the value that these new tools can bring to clinical decision-making.

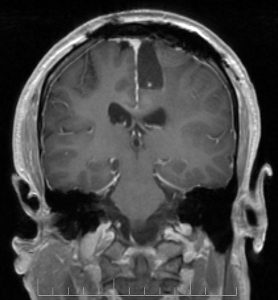

Radiology is probably the medical specialty most associated with AI today because of the tantalizing possibility that computers could help radiologists read images more quickly, enabling earlier diagnosis and treatment.

An often-reported example is the use of computer algorithms to improve early detection of lung cancer by helping radiologists spot and classify lung nodules.

Other AI applications in radiology are also getting coverage. This spring, a radiology professor at Johns Hopkins Medicine made the news for his ambitious plan to build a tumor-detecting algorithm into CT scanner technology to help find pancreatic cancer at an earlier stage.

However, rigorous, peer-reviewed studies of AI programs remain scarce. One exception came earlier this month, when the Alphabet-owned AI firm DeepMind published a study in Nature Medicine indicating that its AI system could recommend the correct referral for more than 50 eye diseases with 94 percent accuracy using routine eye scans. The study was conducted in partnership with Moorfields Eye Hospital NHS Foundation Trust, a leading eye hospital in London.

Jeremy Kahn, a senior tech reporter for Bloomberg, explained in a piece this month that the DeepMind results are unusual because they appear in a peer-reviewed journal and have rigorous study behind them. He reported that there had been only 14 peer-reviewed papers so far involving computer-vision software that interprets medical images, and only one peer-reviewed study of a prospective trial. Meanwhile, Kahn noted, the U.S. Food and Drug Administration (FDA) has approved 13 AI-enabled products.

Medical AI products are coming to market and being tested at hospitals all over the United States. For example, a computer program using an AI algorithm to analyze CT images for indications of stroke obtained FDA approval earlier this year.

The rush to bring AI into medicine continues. Even Facebook is getting into medical imaging research, Chrissy Farr of CNBC reported.

Following how other sectors of the economy are implementing AI can provide a reality check. This week, Sue Castellanos of The Wall Street Journal reported that Goldman Sachs and Morgan Stanley are keen to use AI to detect fraud and reduce trading errors but, amid the hype, are running into the limitations of current AI technology.

“But when it comes to answering questions that need contextual understanding and financial acumen, AI algorithms are easily fooled and therefore it’s difficult to rely on them,” Castellanos reported.

A new AHCJ tip sheet, featuring an interview with Danton Char, M.D., of the Stanford University School of Medicine, offers some ethical questions to consider when covering AI in health care.