Tag: effectiveness

-

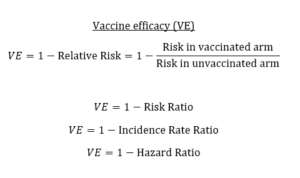

Know the nuances of vaccine efficacy when covering COVID-19 vaccine trials

I’ve written in previous posts about what to look for in COVID-19 vaccine trials and red flags to monitor. The…

-

Comparative Effectiveness Research Fellows named for 2019

Thirteen journalists have been selected for the 2019 class of the AHCJ Fellowship on Comparative Effectiveness Research. The fellowship program…

-

Comparative Effectiveness Research Fellows named for 2018

Eleven journalists have been chosen for the fourth class of the AHCJ Fellowship on Comparative Effectiveness Research. The fellowship program…

-

CJR: Be skeptical of miraculous study results

In the Columbia Journalism Review, Katherine Bagley urges journalists to use caution when reporting the results of medical studies, citing…

-

Op-ed: Obama’s quality metrics could be dangerous

On the Wall Street Journal’s Op-Ed page, Jerome Groopman and Pamela Hartzband cite the shortcomings of a quality metric-based system…

-

Stimulus initiative stirs up controversy

In the Los Angeles Times, Noam N. Levey reviews the controversy that broke out when President Obama included money in…

-

Stimulus funds study of effectiveness of treatments

Robert Pear reports in The New York Times that $1.1 billion of the $787 billion federal economic stimulus package will…

-

Newer technology not always more effective

Joe Rojas-Burke reported in The Oregonian on what he called “a widespread problem in the health care system: the tendency…