Some teens and other people with mental health challenges have been turning to online platforms for emotional support from “caring strangers,” or at least that’s the idea. But a recent five-story series on Mashable.com by reporter Rebecca Ruiz exposed a host of problems associated with the platform 7 Cups, including the potential for manipulation of vulnerable youth by unqualified listeners and targeted abuse and harassment by trolls. Companion stories covered why online child exploitation is so hard to fight and why a contract between 7 Cups and the state of California was abruptly terminated.

In this “How I Did It,” Ruiz explains how a story that began as an explainer on how these platforms work evolved into an investigative series on their potential dangers.

The following conversation has been lightly edited for clarity and brevity.

How did you hear about 7 Cups? What inspired you to start this series?

I had been aware of 7 Cups for a couple of years because I cover digital mental health. I was familiar with their paid therapy offering, and I knew that they did peer-to-peer interactions. In October 2022, I kept getting pitches from companies in the same space, promoting essentially the same service: talk to somebody, feel less lonely, get emotional support. I thought this was kind of an interesting trend. I was familiar with peer support specialists, who use their lived experience in combination with specific training to help other people. I thought that if a number of platforms were offering something close to peer support specialists, this could be really empowering for people.

I started looking into that field thinking that I was going to do what we call a utility, which basically explains these new platforms, what they offer and how they work. I started talking to people who were using them, including users of 7 Cups, and began seeing accounts of negative experiences with 7 Cups in forums like Reddit or Medium.

Then, I started doing some more searching around that and I found a University of California, Irvine, report on [the] Tech Suite [initiative] noting that 7 Cups had a multimillion-dollar contract with the state of California that had been terminated without explanation. That got my attention. I thought, “What happened there?” That’s where it all started.

Did you originally envision this as one story? Or did you go into it with the idea of a series?

I did not expect this to turn into a five-part series, but frankly, it could have been longer. There was a lot more material that just didn’t necessarily make sense for our audience, such as the business models of these platforms. We realized that there were going to be at least two stories, one of which was going to be about what happened in California. Later in the process, my editor wisely suggested breaking up what was happening on 7 Cups into distinct stories: one about teen safety, another about the possibility of being trolled, bullied or abused on the platform, and a third about what happened in California.

How did you find some of the sources, like people who used 7 Cups?

I did some work on social media, trying to identify people who publicly discussed some of the issues in the story. That was one avenue. There were also people involved with the platform in some way who cared deeply about 7 Cups’ mission, wanted to see it work and had a range of emotions about what they found. Maybe they were sad and dismayed, or frustrated. I tapped into folks who were concerned and could open the door a little wider for me to make more of those contacts.

What was important to you in telling these stories?

I’ve reported on mental health for more than a decade, and I’m very aware of the challenges that people face when they try to access mental health care, especially high-quality, affordable mental health care. I very much appreciated that these platforms were trying to address what they felt was a gap in the system. Not everybody needs or wants therapy. It seems like a really important effort to try to connect people with someone who cares and can support them.

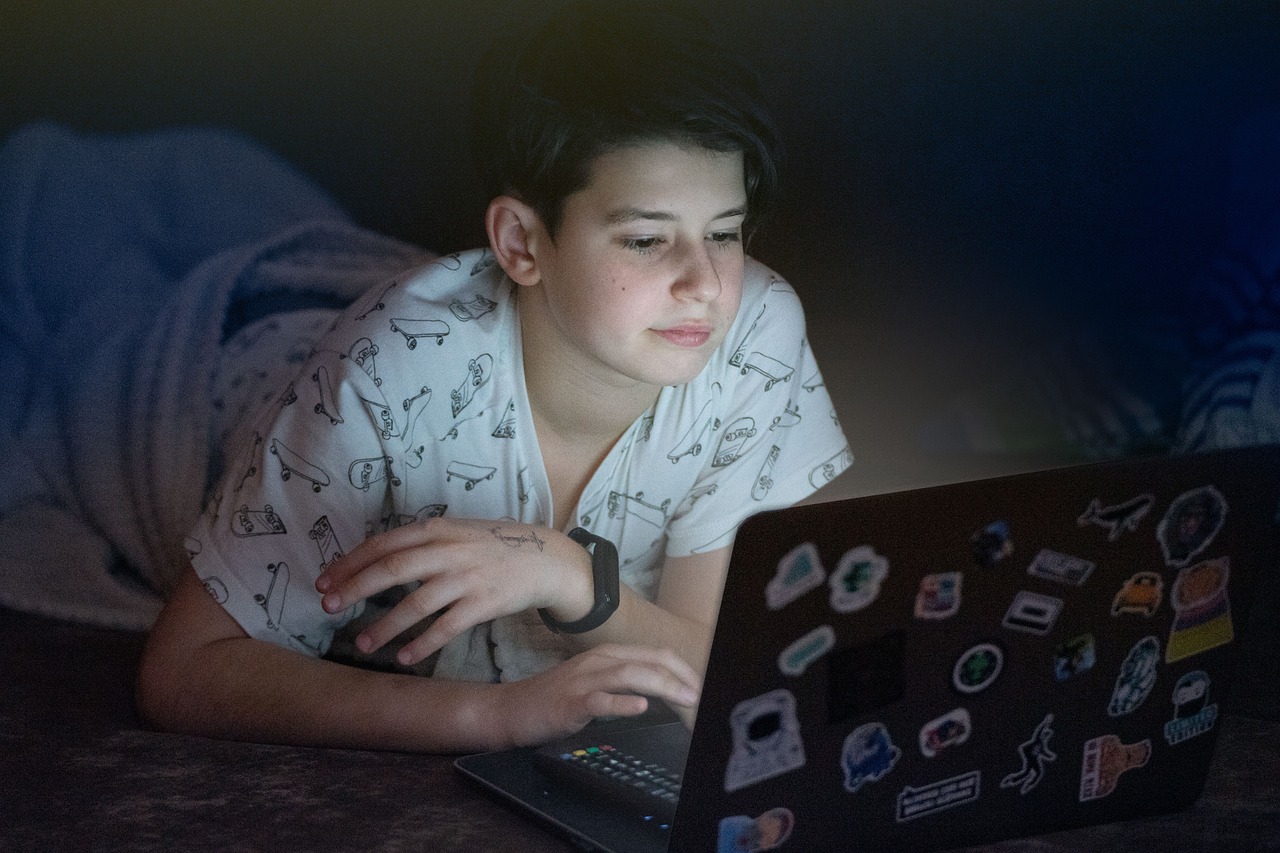

At the same time, one of my areas of expertise is suicide prevention. When I learned that some vulnerable people who went on 7 Cups were being told, “you should go kill yourself,” I thought, these platforms can be misused by bad actors and probably cause more damage than benefit. Then you add in the child exploitation piece, where young people are desperately trying to make a connection with someone who cares about and understands them. They’re very vulnerable, and it’s not fair to them that they are searching for help in a digital landscape that wasn’t built for their safety.

I wanted to raise awareness about that possibility and also [emphasize] that we can’t assume every technological or digital innovation in the mental health care space leads to positive outcomes for everybody. No matter how noble the intentions are, it’s still the internet.

What did you learn about the safety and verification of some of the users on platforms like this?

Given the tools that are available to these platforms, it varies widely. It depends on what the platform intends to do and what its business model is. There’s a lot of pressure on these platforms to scale up as quickly as possible so that they can compete in a larger marketplace where they’re trying to get business from employers, EAPs (employee assistance programs) or insurers, and you need a very large number of users to look attractive. There’s an incentive … to have less verification because it is a barrier to entry for users. The easier you make it for users to join and engage, the bigger the scale you have. I made multiple fake accounts on 7 Cups, both minor accounts and adult accounts; I lost track of how many. That wasn’t possible on other platforms because, for the most part, they are not free. That is directly tied to the verification process. If you’re free, anybody can walk in the door.

What kind of feedback have you received about your series?

I got a note from a source who spoke with me, and they said that they never thought their voice would matter. That was the most impactful feedback I got, in the sense that there were people involved in this who had tried, in their view, to change things to keep people safer and for whatever reason couldn’t succeed. Knowing that they felt their voice had been heard in some way, and that someone had taken their concerns seriously, is in many ways what you hope for as a journalist.

A lot of people working in digital mental health care thanked me for putting a spotlight on some of the challenges, because this space is largely unregulated. There may be more regulation of these platforms by the FDA. But in the absence of rigorous regulation, there aren’t many avenues for holding these platforms accountable other than journalism. We’re still following up on some leads that came out of the reporting, and some things are still percolating, so there may be more to report in the future.