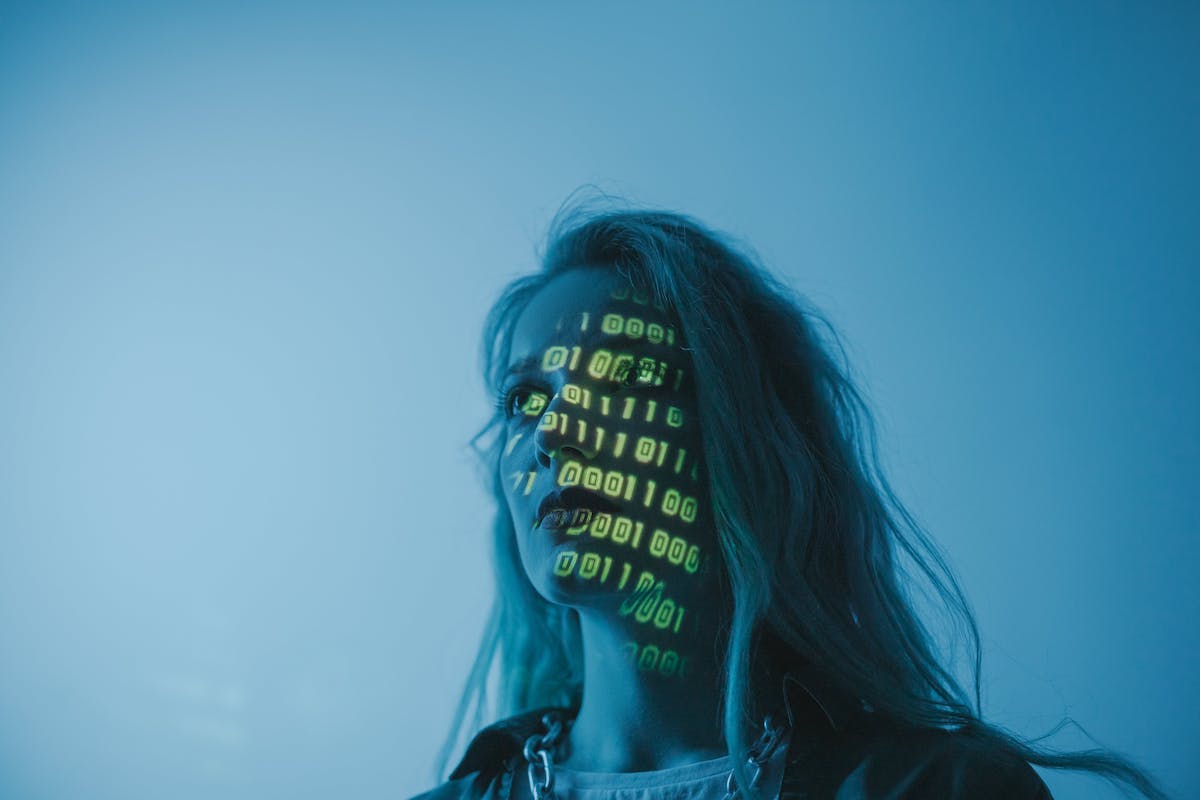

In September, the American Hospital Association warned hospitals and health systems to look out for cybercriminals who may impersonate their executives through the use of deepfakes, Becker’s Health IT reported. Deepfakes, synthetic forms of media, are created through artificial intelligence and machine learning programs to produce believable, realistic-looking but fake videos, pictures or audio.

The warning piggybacked on a notice issued by national security agencies about a rise in hackers using deepfakes to impersonate company executives to hurt their brands or try to gain access to their networks.

When the American Hospital Association declined my request for further comment, I reached out to Dean Sittig, Ph.D., a professor of biomedical informatics at the University of Texas Health Science Center at Houston, for help compiling this tip sheet that journalists can use to protect themselves and their readers.

What is a deepfake?

A deepfake is a computer-generated image, video or audio recording that depicts something that never actually happened but looks or sounds like it came from a real person. Deepfakes are created through sophisticated editing techniques, such as putting someone’s face onto another body.

To create a deepfake video of a celebrity, one could gather thousands of images or video clips of that person from different angles, lighting conditions and expressions, then feed that data into a deep neural network (a computer model trained to recognize a person’s key features) and use the trained model to generate convincing but false video, according to an April 2023 Salon article. These programs also allow users to manipulate a person’s mouth movements and voice to make it appear they are saying something they are not.

Why are deepfakes used?

Hackers could employ deepfakes to spread misinformation or try to gain access to secure information. In one case, a deepfake video of Ukrainian President Volodymyr Zelenskyy attempted to get Ukrainians to believe their military was surrendering to Russia. It has since been taken down.

In another case reported by the Wall Street Journal, criminals mimicked the voice of an executive to demand a fraudulent transfer of $243,000 from an employee of a United Kingdom-based energy company. When the criminals called back, the employee got suspicious.

Deepfake news segments also have been created that appear to be delivered by top journalists and TV networks, as Forbes reported in October.

What would be the advantage of using a deepfake to imitate a hospital executive?

In a health care setting, deepfakes could be used to get employees to give up passwords or access to health records; to tarnish an institution’s reputation, such as by saying the hospital isn’t safe and people should go elsewhere; or to tarnish other institutions’ reputations by saying bad things about them.

Hackers also could create a fake video of an executive doing something unsavory and threaten to release it unless they get money or something else they want. That scenario is less likely, Sittig said, as most hospital executives are not widely recognized or known.

How can you spot a deepfake?

If a video you see seems too outrageous, pause and do more research, an October Washington Post article recommended. Slow down while reading and watching, and don’t rush to forward the information on to others.

In a recent guidance document, the Department of Homeland Security offered these tips:

Look for the following signs when trying to determine if an image or video is fake:

- Blurring in the face but not elsewhere in the image/video.

- Change of skin tone near the edge of the face.

- Double chins, double eyebrows or double edges to the face.

- Lower-quality sections within the same video.

- Box-like shapes or cropped effects around the mouth, eyes and neck.

- Blinking or other movements that are unnatural.

- Changes in the background/lighting, or a background that doesn’t match the foreground.
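For readers who want to see how the first sign on that list (a face that is blurrier than the rest of the frame) might be checked automatically, here is a minimal Python sketch. It is not part of the Homeland Security guidance. It assumes the frame is already available as a grid of grayscale brightness values and that the face location is known; the function names, the `face_box` input and the 0.25 threshold are all hypothetical choices made for illustration. It measures sharpness with a simple Laplacian variance, a common blur heuristic, not a full deepfake detector.

```python
from statistics import pvariance


def region_sharpness(gray, face_box):
    """Return (face_variance, rest_variance) of a 4-neighbour Laplacian.

    gray is a list of equal-length rows of brightness values (0-255).
    face_box is (top, left, bottom, right), with bottom and right exclusive.
    Lower variance suggests a smoother, possibly blurred, region.
    """
    top, left, bottom, right = face_box
    face, rest = [], []
    # Skip the outermost pixels, which lack a full set of neighbours.
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            if top <= y < bottom and left <= x < right:
                face.append(lap)
            else:
                rest.append(lap)
    if not face or not rest:
        raise ValueError("face_box must cover part, but not all, of the image")
    return pvariance(face), pvariance(rest)


def face_looks_blurred(gray, face_box, ratio_threshold=0.25):
    """Flag a frame whose face region is much smoother than everything else.

    ratio_threshold is an arbitrary illustrative cutoff, not a published value.
    """
    face_var, rest_var = region_sharpness(gray, face_box)
    return rest_var > 0 and face_var / rest_var < ratio_threshold
```

A flag from a check like this is only a prompt for closer inspection. Ordinary portrait photography and video compression can also leave a face softer or sharper than its surroundings, so the result should be weighed alongside the other signs on the list.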

Look for the following signs when trying to determine if an audio recording is fake:

- Choppy sentences.

- Varying tone inflection in speech.

- Phrasing: Would the speaker say it that way?

- Context of message: Is it relevant to a recent discussion or can they answer related questions?

- Contextual clues: Are background sounds consistent with the speaker’s presumed location?

To see if you could detect a fake, check out the Detect Fakes website created by the Massachusetts Institute of Technology (MIT) Media Lab, which provides several examples and asks you to decide if the videos and audio files are real or fake.

As the technology improves, it may become more difficult to detect a fake, Sittig said. For example, early deepfakes were easier to spot because the person featured didn’t blink. Once that flaw became widely known, developers improved the technology to add blinking.

“Usually the criminals are just a little bit ahead of the people trying to detect criminal activity,” Sittig said.

How does someone delete a deepfake file of themselves once it’s been released?

“That’s a lot harder,” Sittig said. There are some tools available through Google that supposedly can delete these from archives, and there are companies that will help clean up an online reputation for a fee, he added.

But there are so many places where files are copied, he said. “When someone makes a deepfake of you, and you think you deleted it, there’s probably copies out there that people made.”

What can the targets of deepfakes do?

Victims of deepfakes can contact law enforcement officials who may help by conducting forensic investigations, and people can report abuse on social media platforms using those tools’ reporting procedures. Incidents can also be reported to the FBI and its Cyber Watch at CyWatch@fbi.gov.

Where can I find expert sources for reporting on deepfakes?

There are a number of resources journalists can pursue, such as government agencies like the Department of Homeland Security, FBI and Cybersecurity and Infrastructure Security Agency; medical informatics groups like the American Medical Informatics Association; and professors of computer science and biomedical informatics.

The following organizations mentioned in the Homeland Security guidance work with victims of deepfakes and should have experts available for comment:

- Cyber Civil Rights Initiative, an organization that provides a 24-hour crisis helpline, attorney referrals and guides for removing images from social media.

- EndTAB (Ending Tech-Enabled Abuse), an organization that provides resources for education and reporting abuse.

- Cybersmile, a nonprofit anti-bullying organization that provides expert support for victims of cyberbullying and online hate campaigns.

What are some untapped story angles journalists could pursue?

“Everyone talks about the bad uses of deepfakes, but what about the good uses?” Sittig said.

One study demonstrating beneficial uses of deepfakes came from researchers in Taiwan, who trained a computer system on thousands of facial expressions to create videos of synthetic patients. The videos are meant to help physicians improve their empathy and their ability to interpret facial expressions when interacting with patients.

Resources:

- AHA warns ‘deepfakes’ could impersonate hospital execs — article from Becker’s Health IT.

- Increasing Threat of Deep Fake Identities — guidance document from the Department of Homeland Security.

- ‘A.I. Obama’ and Fake Newscasters: How A.I. Audio is Swarming TikTok — article from the New York Times.

- How to avoid falling for misinformation, AI images on social media — article from the Washington Post.

- In A New Era of Deepfakes, AI Makes Real News Anchors Report Fake Stories — article from Forbes.

- Deepfake videos are so convincing – and so easy to make – that they pose a political threat — article from Salon.com.

- AI-Generated Voice Deepfakes Aren’t Scary Good – Yet — article from Wired magazine.