At the American Academy of Pediatrics annual meeting last fall, I attended a talk by Kevin Powell, M.D., Ph.D., called “Evidence-Based Medicine in a World of Post-Truth and Alternative Facts.”

Despite the title’s allusions, however, the talk did not discuss problems in communicating science or medical findings in today’s media ecosystem. Rather, Powell argued that many of the problems behind today’s flawed reporting and “fake news” have long existed in medical research itself, and that there are ways to address them.

Powell’s ideas are not new. He cited multiple other academic critics who have raised concerns about the way competing pressures and motivations in research — such as the expectation of significant results, the devaluing of negative results, the requirements for tenure and the overall publish-or-perish environment — can taint research.

Perhaps the best known of these critics is John Ioannidis, Ph.D., a professor of medicine and statistics at Stanford University, whose 2005 watershed article “Why Most Published Research Findings Are False” continues to be among the most cited of all research articles. But more than a decade before Ioannidis’s article, Doug Altman, a professor of medical statistics at Oxford, had similarly called attention to “The scandal of poor medical research” in BMJ in 1994. And the then-editor of BMJ, Richard Smith, returned two decades later to lament the lack of progress since Altman’s article in his own commentary in 2014, “Medical research — still a scandal.”

Their critiques mirror one another, highlighting especially the proliferation of low-quality observational studies, the same ones that frequently end up in headlines and contribute to reader fatigue over “changing” research findings. The only surprising thing about Altman’s conclusion is that he wrote it a quarter century ago, yet it is even more true now, amid the daily glut of research, than it was then: “We need less research, better research and research done for the right reasons. Abandoning using the number of publications as a measure of ability would be a start.”

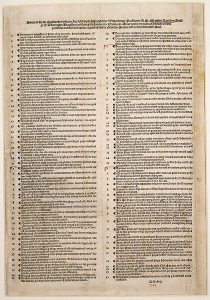

Powell’s presentation was not a rant, however. It was a hopeful call to arms, as he discusses in a video that accompanies the article I wrote about his session for Pediatric News. He concluded with his “9.5 Theses for a Reformation of Evidence-Based Medicine,” a riff on the 95 theses with which Martin Luther started the Protestant Reformation. The parallel is deliberate: the reverence so many hold for medical research may, ironically, hold it back, just as reverence for tradition prevented reform in the Catholic Church.

The 9.5 theses are intended for physicians and researchers, but they also work effectively as guidelines for journalists reporting on medical research, pointing to the numbers and findings journalists should look for and report:

1 – Recognize academic promotion as a bias, just like drug money.

2 – Don’t confound statistically significant and clinically significant.

3 – Use only significant figures.

4 – Use the phrase “we did not DETECT a difference” and include power calculations.

5 – Use confidence intervals instead of P values.

6 – Use Number Needed to Harm and Number Needed to Treat instead of relative risk.

7 – Absence of proof is not proof of absence. When there is insufficient randomized controlled trial evidence, have an independent party estimate an effect based on non-RCT articles.

8 – Any article implying clinical practice should change must include counterpoint and a benefit cost analysis. Consider both effectiveness and safety.

9 – Post-marketing peer review.

9.5 – Beware of research based on surveys.
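For journalists who want to see why theses 5 and 6 matter, here is a minimal sketch of the arithmetic behind them. It is my own illustration, not something from Powell’s talk: the function name `risk_summary` and the trial counts are hypothetical, and the confidence interval uses the simple normal (Wald) approximation, which is only reasonable for large samples.

```python
import math


def risk_summary(events_control, n_control, events_treated, n_treated):
    """Summarize a two-arm study with relative and absolute measures.

    All inputs are counts. This is an illustrative sketch, not a
    substitute for a statistician's analysis.
    """
    if events_control == 0:
        # Relative risk is undefined when the control group has no events.
        raise ValueError("control group needs at least one event")

    risk_control = events_control / n_control
    risk_treated = events_treated / n_treated

    # Relative risk: the ratio headlines tend to quote ("cuts risk in half").
    relative_risk = risk_treated / risk_control

    # Absolute risk reduction: the plain difference in risk between groups.
    arr = risk_control - risk_treated

    # 95% confidence interval for the risk difference (Wald approximation).
    std_err = math.sqrt(
        risk_control * (1 - risk_control) / n_control
        + risk_treated * (1 - risk_treated) / n_treated
    )
    ci_low = arr - 1.96 * std_err
    ci_high = arr + 1.96 * std_err

    # Number needed to treat: people treated to prevent one event.
    # If arr is negative, the treated group did worse and the same figure
    # is read as a number needed to harm. Infinite when risks are equal.
    nnt = math.inf if arr == 0 else 1 / abs(arr)

    return {
        "relative_risk": relative_risk,
        "absolute_risk_reduction": arr,
        "ci_95": (ci_low, ci_high),
        "number_needed": nnt,
    }


if __name__ == "__main__":
    # Hypothetical trial: 40 of 2,000 controls and 20 of 2,000 treated
    # patients have the outcome (2 percent versus 1 percent).
    summary = risk_summary(40, 2000, 20, 2000)
    low, high = summary["ci_95"]
    print(f"Relative risk: {summary['relative_risk']:.2f}")
    print(f"Absolute risk reduction: {summary['absolute_risk_reduction']:.2%}")
    print(f"95% CI for the reduction: {low:.2%} to {high:.2%}")
    print(f"Number needed to treat: {summary['number_needed']:.0f}")
```

With these made-up numbers, the relative risk is 0.5, so a headline could truthfully say the treatment “halves the risk.” The absolute reduction, though, is one percentage point, with a 95 percent confidence interval of roughly 0.25 to 1.75 percentage points, and about 100 people would need to be treated to prevent a single event. That is the gap between relative and absolute measures that the theses ask reporters to look for.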