At some point, almost all health care journalists will need to cover a medical study or two. When that happens, you’ll want at least a passing understanding of p-values and statistics, and you’ll need to know that correlation does not imply causation.

For a session on May 2, AHCJ’s medical studies topic leader Tara Haelle moderated a panel, “Begin mastering medical studies.” Haelle and two experts explained some of the finer points of covering studies. The experts were Ishani Ganguli, M.D., an assistant professor of medicine at Harvard Medical School and an internal medicine physician at Brigham and Women’s Hospital; and Regina Nuzzo, Ph.D., a freelance journalist and professor of science, technology and mathematics at Gallaudet University.

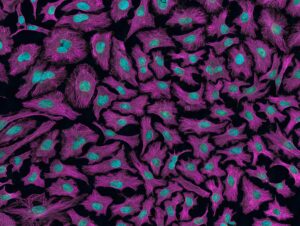

To introduce the topic, Haelle explained that we should recognize that preclinical studies are different from clinical trials. As the name implies, preclinical work involves research done before researchers begin the clinical study phase.

“These are the ones that you generally want to avoid,” Haelle commented. There are two types of preclinical studies: in vitro studies, which are done in labs, for example in petri dishes; and in vivo studies, which involve animals such as mice or rats.

From there, Haelle outlined the five phases of clinical trials:

- Phase 0 is exploratory: researchers attempt to determine whether a drug will behave in humans as it does in animals and whether more research is needed.

- Phase 1 is the first test of an intervention in a small group of patients to assess safety, the dosage range and any side effects.

- Phase 2 is designed to study safety and effectiveness in a larger group.

- Phase 3 is conducted with larger groups, and again, the researchers are studying safety, confirming whether the medication is effective, assessing side effects and comparing the medication against other drugs.

- Phase 4 is done after the FDA has approved the drug and is conducted on different populations to assess any long-term side effects.

Almost all clinical trials involve an interventional study, in which researchers assess drugs, devices, products, procedures, screenings, and behavioral interventions. In these studies, results are compared to those of a control group.

Following Haelle’s description of the types of trials, Nuzzo and Ganguli offered warnings for journalists to consider.

When assessing claims in a clinical trial, Nuzzo suggested journalists should be skeptical. All researchers should verify their claims with data, she said, particularly those who make unusual claims. Also, researchers should be able to replicate their results in a follow-up study, she added.

Sometimes researchers will not report all results or explain how they did their analysis, Nuzzo commented. At other times, researchers will change how they analyze the data or which results they report. Such changes could mean the researchers were trying to make their results look better than they were. “All the analysis and the results need to be presented no matter what the outcome is,” she said.

To verify whether the analysis or results were revised after the study began, journalists can compare the study design at www.clinicaltrials.gov with the final results and analysis as published, Haelle explained. At the clinical trials site, the National Library of Medicine maintains a database of trials that are funded by private and public sources around the world. Note that simply including a trial in this database does not mean the federal government has evaluated the study.

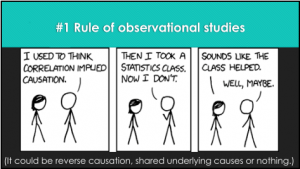

Nuzzo also warned that many studies are incorrect or have had their design reconfigured to make the results look better. “Half of studies are wrong or are p-hacked,” she said. P-hacking happens when researchers select data or statistical analyses that make insignificant results appear to be significant, according to this PLOS article.
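To see why p-hacking matters, it can help to run the numbers. The sketch below is an illustration only, not something presented at the panel. It assumes a study in which the treatment has no real effect, a conventional significance threshold of 0.05, and outcomes that are statistically independent of one another. The function names are made up for this example. It estimates how often such a study would still turn up at least one "significant" result if researchers checked several outcomes and reported whichever one cleared the threshold.

```python
import random

ALPHA = 0.05  # conventional significance threshold (an assumption of this sketch)


def chance_of_false_positive(num_outcomes: int, alpha: float = ALPHA) -> float:
    """Probability that at least one of num_outcomes independent tests
    looks 'significant' even though no real effect exists."""
    return 1 - (1 - alpha) ** num_outcomes


def simulate(num_outcomes: int, num_studies: int = 20_000, seed: int = 0) -> float:
    """Fraction of simulated no-effect studies with at least one p < ALPHA."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_studies):
        # When there is truly no effect, a p-value is equally likely
        # to fall anywhere between 0 and 1.
        p_values = [rng.random() for _ in range(num_outcomes)]
        if min(p_values) < ALPHA:
            hits += 1
    return hits / num_studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        print(
            f"{k:>2} outcomes tested: "
            f"formula {chance_of_false_positive(k):.3f}, "
            f"simulation {simulate(k):.3f}"
        )
```

Under these assumptions, the formula gives roughly a 5% chance of a false positive with one outcome, about 23% with five and about 64% with 20. That is one reason to ask researchers how many outcomes they measured and which ones they specified in advance.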

Some consider the p-value to be a measure of confidence in the data in question, but that’s an oversimplification of the complex issues surrounding p-values. For a more thorough examination of the topic, Nuzzo referred to an article she wrote for Nature, “Scientific method: Statistical errors.” The subhead sums it up nicely: “P values, the ‘gold standard’ of statistical validity, are not as reliable as many scientists assume.”

During Ganguli’s presentation, the Harvard professor explained why health services research is worth covering. Those who do health services research examine how people get access to health care, how much care costs, and what happens to patients as a result of care. The goals of this research are to identify the most effective ways to organize, manage, finance and deliver high-quality care; reduce medical errors; and improve patient safety.

When health care journalists need to decide whether to cover a study, Ganguli suggested asking if the study would fill a gap in researchers’ knowledge. For example, is the study actionable and have others done similar research? Look for reliable sources of such studies, such as those at PubMed, the Kaiser Family Foundation or the Commonwealth Fund, she suggested.

To determine if a study is actionable, read the objective and background sections in the abstract. She also recommended reading the footnotes to get an idea of which other researchers have written about the subject and which aspects of the issue they have uncovered.

For more on this topic, read some of the tip sheets Haelle has written and collected on this page. In particular, check out these two: